Trusted by security leaders to secure their cloud

Cole Horsman

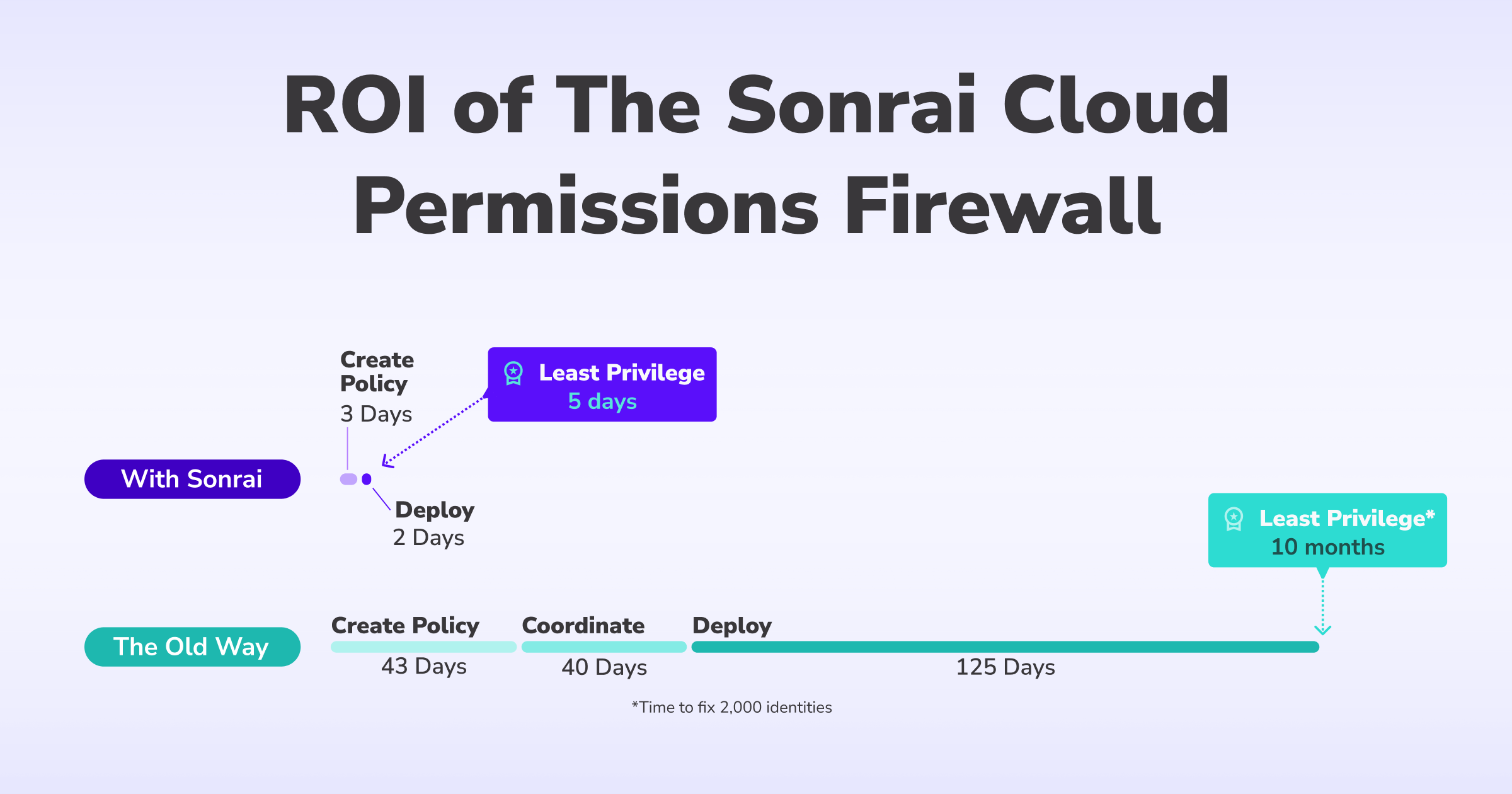

AVP, Security Operations

“Sonrai helped us do in days what would’ve taken months, automating identity management and achieving least privilege across AWS.”

Preetam Sirur

Chief Information Security Officer

“The challenge with deleting unused identities or enforcing least privilege is that we know it’s the ‘right’ thing to do, but everyone’s afraid it’ll break something or interrupt our development cycles. We don’t have to worry anymore.”

Brendan Putek

Director of DevOps

“Within five minutes I had disabled regions that were unused across my entire AWS organization.”

Kenneth Milcetich

Director of Cyber and InfoSec

“Sonrai not only identified the overly permissive actions granted to our identities, but also provides a least effective access policy based on the identities’ usage... All of this boils down to a significant increase in our cloud security posture.”

Josh McLean

Chief Information Officer

“Our transition from tedious, weeks-long tasks to accomplishing Least Privilege outcomes in just a few days has been remarkable. This approach has saved us a tremendous amount of time while also guaranteeing the security of all critical permissions.”

Chad Lorenc

Security Delivery Manager

“Sonrai is one of the top tools to quickly scale when you're trying to do privileged management in the cloud.”

See Sonrai Security in Action

Watch a recorded demo or get a personalized demo to see how Sonrai Security can secure identities and entitlements across your entire public cloud, including Amazon Web Services (AWS), Azure, GCP and OCI.